
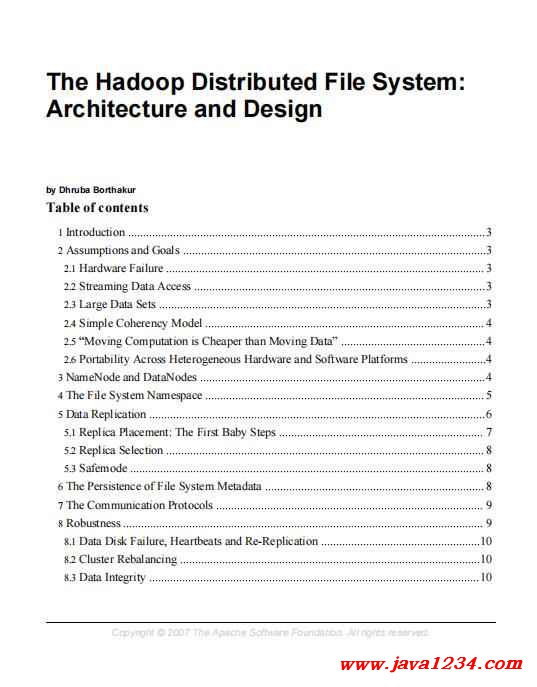

The Hadoop Distributed File System: Architecture and Design (PDF download)


Main content:

2.4. Simple Coherency Model

HDFS applications need a write-once-read-many access model for files. A file once created, written, and closed need not be changed. This assumption simplifies data coherency issues and enables high throughput data access. A Map/Reduce application or a web crawler application fits perfectly with this model. There is a plan to support appending-writes to files in the future.
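
The write-once-read-many model can be illustrated with a small in-memory sketch. This is not HDFS code: the class `WriteOnceFile` and its methods are hypothetical names chosen for illustration, and the rule that reads are only allowed after `close()` is a simplifying assumption of the toy model.

```python
class WriteOnceFile:
    """Toy in-memory file: writable until closed, read-only afterwards.

    Hypothetical illustration of write-once-read-many; not an HDFS API.
    """

    def __init__(self, name):
        self.name = name
        self._chunks = []
        self._closed = False

    def write(self, data: bytes) -> None:
        # Once the file is closed its contents never change again.
        if self._closed:
            raise PermissionError(f"{self.name} is closed and immutable")
        self._chunks.append(data)

    def close(self) -> None:
        self._closed = True

    def read(self) -> bytes:
        # Assumption of this sketch: readers only see completed files.
        if not self._closed:
            raise ValueError(f"{self.name} is still being written")
        return b"".join(self._chunks)
```

Because a closed file can no longer change, any number of readers can share it without locks or cache invalidation, which is the coherency simplification the section describes.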

2.5. “Moving Computation is Cheaper than Moving Data”

A computation requested by an application is much more efficient if it is executed near the data it operates on. This is especially true when the size of the data set is huge. This minimizes network congestion and increases the overall throughput of the system. The assumption is that it is often better to migrate the computation closer to where the data is located rather than moving the data to where the application is running. HDFS provides interfaces for applications to move themselves closer to where the data is located.
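
One way to picture this principle is a scheduler that prefers a worker already holding a replica of the block a task needs. The following is a minimal sketch under that assumption; `pick_worker`, its parameters, and the host names are hypothetical and do not correspond to any HDFS interface.

```python
def pick_worker(block_hosts, free_workers):
    """Choose a worker for a task that reads one block.

    block_hosts:  set of hosts that store a replica of the block.
    free_workers: list of hosts with spare capacity, in preference order.
    Returns (worker, is_local).
    """
    if not free_workers:
        raise RuntimeError("no free workers")
    for worker in free_workers:
        if worker in block_hosts:
            # The computation moves to the data: no block transfer needed.
            return worker, True
    # Fallback: no local worker is free, so the block must cross the network.
    return free_workers[0], False
```

For example, `pick_worker({"node2", "node3"}, ["node1", "node3"])` selects `node3` as a local worker, while `pick_worker({"node2"}, ["node1"])` falls back to the non-local `node1`.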

2.6. Portability Across Heterogeneous Hardware and Software Platforms

HDFS has been designed to be easily portable from one platform to another. This facilitates widespread adoption of HDFS as a platform of choice for a large set of applications.

3. NameNode and DataNodes

HDFS has a master/slave architecture. An HDFS cluster consists of a single NameNode, a master server that manages the file system namespace and regulates access to files by clients. In addition, there are a number of DataNodes, usually one per node in the cluster, which manage storage attached to the nodes that they run on. HDFS exposes a file system namespace and allows user data to be stored in files. Internally, a file is split into one or more blocks and these blocks are stored in a set of DataNodes. The NameNode executes file system namespace operations like opening, closing, and renaming files and directories. It also determines the mapping of blocks to DataNodes. The DataNodes are responsible for serving read and write requests from the file system’s clients. The DataNodes also perform block creation, deletion, and replication upon instruction from the NameNode.
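
The division of labour described above can be sketched as a toy in-memory model. Everything here is an illustrative assumption rather than HDFS code: the class and function names are hypothetical, the 4-byte block size is chosen only to keep the example small, placement is simple round-robin, and the client writes each replica itself.

```python
import itertools

BLOCK_SIZE = 4  # bytes; tiny on purpose so the example is easy to follow


class DataNode:
    """Stores block contents; knows nothing about files or paths."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}  # block_id -> bytes

    def write_block(self, block_id, data):
        self.blocks[block_id] = data

    def read_block(self, block_id):
        return self.blocks[block_id]


class NameNode:
    """Holds only metadata: the namespace and the block-to-DataNode map."""

    def __init__(self, datanode_names, replication=2):
        self.datanode_names = list(datanode_names)
        self.replication = replication
        self.namespace = {}  # path -> ordered list of block ids
        self.block_map = {}  # block_id -> list of DataNode names
        self._ids = itertools.count()
        self._cursor = 0

    def create(self, path):
        self.namespace[path] = []

    def allocate_block(self, path):
        # Assumed placement policy: round-robin over the DataNodes.
        n = len(self.datanode_names)
        targets = [self.datanode_names[(self._cursor + i) % n]
                   for i in range(self.replication)]
        self._cursor += 1
        block_id = next(self._ids)
        self.namespace[path].append(block_id)
        self.block_map[block_id] = targets
        return block_id, targets

    def locate(self, path):
        return [(b, self.block_map[b]) for b in self.namespace[path]]

    def rename(self, old, new):
        # A pure namespace operation: no block data moves.
        self.namespace[new] = self.namespace.pop(old)


def write_file(namenode, nodes, path, data):
    """Split data into blocks and store each block on its target DataNodes."""
    namenode.create(path)
    for offset in range(0, len(data), BLOCK_SIZE):
        block_id, targets = namenode.allocate_block(path)
        for name in targets:
            nodes[name].write_block(block_id, data[offset:offset + BLOCK_SIZE])


def read_file(namenode, nodes, path):
    """Ask the NameNode where the blocks are, then read from the DataNodes."""
    return b"".join(nodes[hosts[0]].read_block(block_id)
                    for block_id, hosts in namenode.locate(path))
```

A short usage example: with `nodes = {n: DataNode(n) for n in ["dn1", "dn2", "dn3"]}` and `nn = NameNode(nodes)`, calling `write_file(nn, nodes, "/a.txt", b"hello world")` creates three blocks, and `read_file(nn, nodes, "/a.txt")` reassembles the original bytes. File data passes only through the `DataNode` objects, while the `NameNode` object handles metadata alone.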


Jiangsu Public Network Security Filing No. 32061202001004